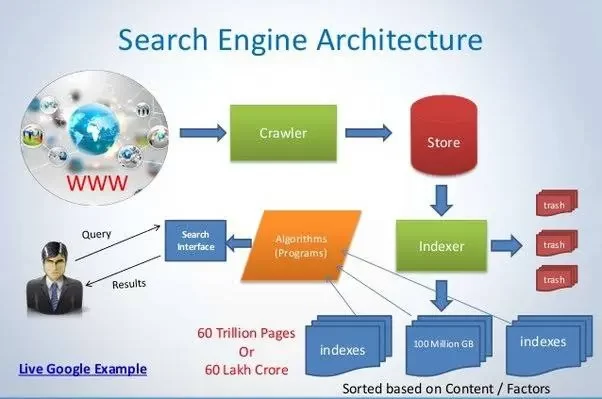

You type a query into Google and it shows results within seconds, right? But have you ever wondered what activities are involved in this near-instant process? Let’s find out what crawling and indexing are. Generally, indexing refers to a method of information acquisition (information development) in which documents are collected and sorted based on keywords.

Subsequently, an index is formed that is similar to a library catalogue. The indexed documents, usually text content, are prepared for a search for a particular document or keyword and are provided with descriptors. When you search for a keyword and its associated documents, ideally the most relevant content is displayed.

In a library, descriptors can be data such as the author, title, or ISBN. In principle, the same thing happens with a query on the Internet. In other words, the term indexing denotes the formation of an index in which web documents are collected and sorted using various descriptors (such as keywords) and made available for subsequent searches (information retrieval).
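The idea of sorting documents by keyword descriptors can be sketched as a tiny inverted index. This is only an illustration under simple assumptions: the document IDs, the sample texts, and the `build_index` and `search` helpers are hypothetical, and a real search engine index is far more elaborate.

```python
from collections import defaultdict


def build_index(documents):
    """Map each keyword (descriptor) to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            # Strip simple punctuation so "pages." and "pages" share one entry.
            index[word.strip(".,!?")].add(doc_id)
    return index


def search(index, keyword):
    """Return the IDs of all documents indexed under the keyword, sorted."""
    return sorted(index.get(keyword.lower(), set()))


# Hypothetical sample documents.
docs = {
    "page-1": "Crawling discovers new pages.",
    "page-2": "Indexing stores pages for retrieval.",
}
index = build_index(docs)
print(search(index, "pages"))     # ['page-1', 'page-2']
print(search(index, "indexing"))  # ['page-2']
```

The index is built once, before any query arrives, so a lookup only has to read the entry for the keyword instead of scanning every document.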

Indexing is the activity through which website content is added to Google and thereby gains visibility on the SERP. How is a new page indexed?

There are a few ways in which a site can be indexed. Let’s look at the most common ones:

(i) The simplest approach is to do nothing. Just wait, because the page gets indexed by itself after being crawled. Google eventually discovers the new content and indexes it.

(ii) The second way is to ask Googlebot to index the page sooner. This is mostly used when you have made a new change and want Google to recognize it and reflect it on the SERP.

The second approach is preferred when you need to make critically important changes and additions visible. The situations may include:

(i) When an important page has been optimized

(ii) When the title and descriptions have been revised to increase click-throughs

(iii) When you want to know exactly when the page appeared on the SERP, in order to measure the improvement statistics.

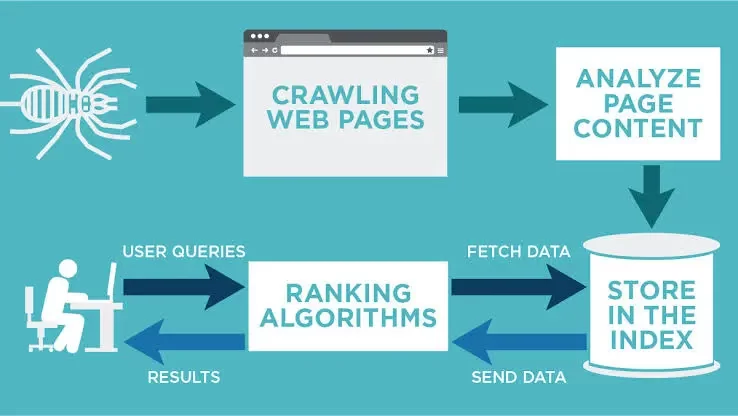

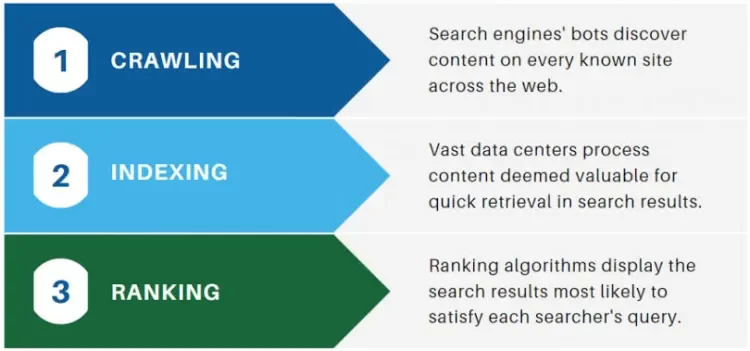

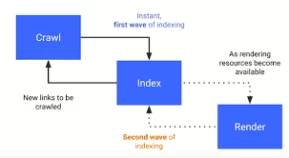

Indexing is the next step, in which all the content collected through crawling is stored and organized in the database. Once the page is indexed, it becomes part of the search engine results. The indexing of web documents is an extensive and complex process. It uses various methods from information science, computer science, and computational linguistics.

In addition to information development (described above) and information retrieval, another important term is data mining, which is the extraction of valuable content from a large amount of data. Various processes related to indexing take place before a search term is entered. Web documents have to be searched and parsed (see Crawlers, Spiders, Bots).

These are collected, sorted, and ranked hierarchically in an index before they can be displayed in the SERPs of search engines in a particular order. Search engine providers such as Google, Yahoo, and Bing are constantly working to improve the indexing of websites. The aim is to offer the most relevant content. Google, for instance, overhauled its index when it introduced the Caffeine index.

Caffeine is meant to include web content in the index faster by continuously searching certain parts of the global Internet synchronously. Moreover, web content such as videos or podcasts is intended to be found more easily.

Different consequences and opportunities arise for website operators and webmasters regarding indexing. If a web page is to be indexed and found in the index, it must first be available to the crawler or spider. If it is a new website, it can be submitted to the search engine. The aim is to get included in the index by registering it.

The website must be findable by the crawler and readable to a certain degree. Meta tags, which can be listed in the head section of a webpage, are one way to ensure this. They can also be used to deny access to crawlers in order to exclude a particular page from the index. Canonical tags and directives in the robots.txt file can also be used to control what crawlers fetch and which version of a page ends up in the index.
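To make these two controls concrete, here is a minimal sketch using only Python's standard library. It checks a URL against robots.txt rules with `urllib.robotparser` and looks for a `noindex` directive in a robots meta tag. The robots.txt content, the example URLs, and the helper names `is_crawl_allowed` and `is_indexable` are assumptions made for illustration; this is not how any particular search engine implements the checks.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawl_allowed(robots_txt, user_agent, url):
    """Return True if the robots.txt rules let this user agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def is_indexable(html):
    """Return False if the page carries a robots meta tag with 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" not in parser.directives


print(is_crawl_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/blog/post"))
print(is_crawl_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/private/report"))
print(is_indexable('<head><meta name="robots" content="noindex, nofollow"></head>'))
```

Note the difference between the two checks: robots.txt governs whether a crawler may fetch a URL at all, while the robots meta tag is read from a page that has already been fetched and tells the search engine whether to keep it in the index.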

The indexing status can be checked in Google Search Console. URLs that can already be found in the index are displayed under the Google Index and Index Status tabs. That includes those that have been blocked by the website operator.

Indexing is very important for search engine optimization. Webmasters and site operators can control this process from the start and make sure that web pages are crawled, indexed, and then displayed in the SERPs. However, their position in the SERPs can only be influenced through various on-page and off-page measures and by providing high-quality content.

Crawling is a preliminary and critical Google activity that involves scanning and searching the Internet for content.

In this process, every URL that belongs to a particular website is examined. Crawling includes the following activities:

(i) Scans and analyzes all newly launched websites.

(ii) Analyzes recent changes to existing websites.

(iii) Scans websites for dead links.

(iv) Scans all pages of the website that are linked through URLs.

(v) Crawls the pages according to the website owner’s preferences. Although crawling is a Google activity, site owners can customize the crawling sequence on their websites.
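The link-following and dead-link activities listed above can be sketched as a small breadth-first crawler. This is a simplified illustration, not Googlebot's actual behaviour: the `SITE` dictionary stands in for the web so that the example runs without network access, and the `crawl` function, the `LinkParser` class, and the example URLs are all hypothetical.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkParser(HTMLParser):
    """Collects the href values of all <a> tags on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch):
    """Breadth-first crawl; `fetch(url)` returns HTML, or None for a dead link."""
    seen = {start_url}
    queue = deque([start_url])
    crawled, dead = [], []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            dead.append(url)  # Linked from somewhere, but nothing to fetch.
            continue
        crawled.append(url)
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # Resolve relative links against the page URL.
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return crawled, dead


# Hypothetical three-page site; "/old-post" is linked but does not exist.
SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/old-post">Old post</a>',
}
crawled, dead = crawl("https://example.com/", SITE.get)
print(crawled)
print(dead)  # ['https://example.com/old-post']
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, as the home and about pages do here.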

Search Console gives website owners a way to decide the sequence in which they prefer their site to be scanned. Using Search Console, the owner can guide the crawling process. They can request a recrawl, or even instruct the crawler to stop its activity.

Crawl budget is the amount of resources that Google uses to crawl and index the website. It is an important term when we talk about indexing. The crawl budget depends on two main factors:

(i) The quality of your server: how quickly can your server respond to crawling without affecting the UX of your site?

(ii) How quickly do you need your content to be crawled and indexed? A news website needs to be crawled and indexed with priority compared to a local website run by a small, ordinary business. The former needs more budget than the latter.
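Google does not publish how it allocates crawl budget, so the following is only a toy model of the two factors above, not the real algorithm. It assumes that a slower server leaves room for fewer fetches in a fixed time window, and that frequently updated pages are fetched first. The function names, the politeness delay, and all numbers are made up for illustration.

```python
import heapq


def fetches_per_window(window_seconds, avg_response_seconds, delay_seconds):
    """Toy estimate: how many sequential fetches fit into one time window."""
    return int(window_seconds // (avg_response_seconds + delay_seconds))


def plan_crawl(pages, budget):
    """Pick at most `budget` URLs, most frequently updated first."""
    chosen = heapq.nlargest(budget, pages, key=lambda p: p["updates_per_day"])
    return [p["url"] for p in chosen]


# Hypothetical pages with made-up update rates.
pages = [
    {"url": "https://news.example/", "updates_per_day": 48},
    {"url": "https://news.example/politics", "updates_per_day": 12},
    {"url": "https://shop.example/", "updates_per_day": 0.1},
    {"url": "https://shop.example/contact", "updates_per_day": 0.01},
]

fast_server = fetches_per_window(60, 0.5, 1.5)  # 30 fetches fit
slow_server = fetches_per_window(60, 4.5, 1.5)  # only 10 fetches fit
print(fast_server, slow_server)
print(plan_crawl(pages, budget=2))
```

In this model a fast server and fresh content both push a site toward being crawled more often, which mirrors the two factors listed above.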

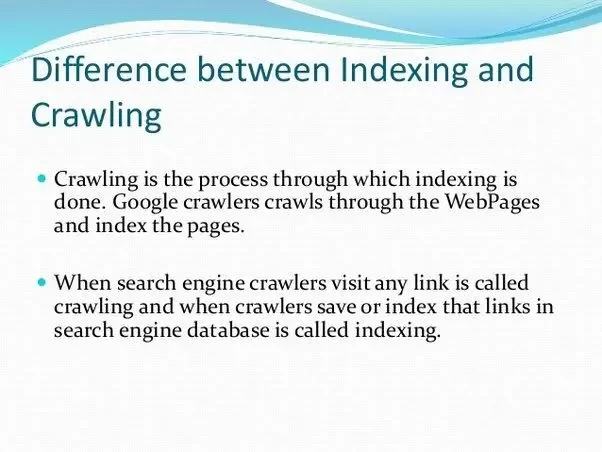

Crawling and indexing in SEO are closely related to each other. But each has certain determining factors that measure its compatibility with the SERP. These factors should be kept in mind when resolving the question of ‘why is my page not getting indexed’.

I hope this has clarified the concepts of crawling and indexing. It should also have made clear when and how they are independent of each other, and what the difference between crawling and indexing in SEO is. Crawling and indexing are an important chapter of the SEO industry.

These are the two very first activities that should be clear in the minds of digital marketers. I have tried to cover the complete and critical concepts of each topic. If you wish to start a career in search engine optimization, here is how. Go through the above sections and understand the fundamentals of SEO. Then, take a search engine optimization course to gain knowledge of the domain.

There are various ways to start a career in it. You can take a digital marketing course that suits you and pursue a career in it.
