Welcome to Part 7 of our Pandas series! In this installment, we look at times and dates in pandas using Python. Date and time data is prevalent in fields like finance, economics, and climate science, and it often comes with unique challenges. Fortunately, Pandas equips you with powerful tools to handle, manipulate, and gain insights from such data.

Throughout this blog, we’ll explore how Pandas simplifies the handling of datetime data, learn about resampling and frequency conversion, touch on rolling statistics and moving averages, and look at time-based indexing and slicing. By the end, you’ll understand how to use Pandas for time series analysis, so that you can make informed decisions and uncover patterns in your temporal data. Let’s get started!

Time series data, a sequential collection of observations recorded over time (often at regular intervals), plays a vital role in numerous domains. Whether it’s tracking stock prices in finance, monitoring temperature changes in climate science, or studying customer behavior in marketing, time series data provides valuable insights into how things change over time.

In this section, we’ll explore the significance of times and dates in pandas across various fields and industries. We’ll also highlight the crucial role that Pandas, a powerful Python library, plays in the handling and analysis of time-based data. With Pandas, you’ll gain the ability to efficiently manipulate, visualize, and extract meaningful information from time series datasets. Let’s dive into the world of time series analysis and discover the endless possibilities it offers with Pandas at your side.

Pandas is a versatile and widely used library for data analysis and manipulation, and it is especially useful for handling and analyzing time-based data. Here are some key reasons why Pandas is essential for working with time series data:

Pandas provides data structures like Series and DataFrame, which are highly optimized for time series operations. These structures allow for easy organization, manipulation, and analysis of time-based data.

Pandas has robust support for datetime objects, making it effortless to work with dates and times. It provides powerful functions for parsing, formatting, and performing arithmetic operations on datetime data.

Pandas offers resampling methods that simplify tasks like downsampling (reducing data to a lower frequency) and upsampling (increasing data to a higher frequency). This is crucial for aligning data with different time frequencies.

Time series often require rolling statistics like moving averages or rolling sums to identify trends and patterns. Pandas provides convenient rolling window operations for this purpose.

In Pandas, you can set datetime columns as the index of a DataFrame, allowing for efficient time-based slicing, filtering, and grouping.

Pandas integrates seamlessly with visualization libraries like Matplotlib and Seaborn, enabling the creation of insightful time series plots and charts.

Time-based data often needs transformation, such as shifting or differencing to make it stationary for analysis. Pandas offers functions for these operations.

Handling missing values is common in time series data. Pandas provides methods to interpolate or fill missing data points accurately.

Pandas can align data from different sources based on timestamps, making it easier to combine and analyze datasets from various origins.

In summary, Pandas simplifies the complexities of times and dates, making it accessible to data analysts, scientists, and engineers. Its comprehensive functionality, efficient data structures, and seamless integration with other Python libraries make it an indispensable tool for anyone working with time-based data analysis.
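A few of the capabilities listed above can be sketched together on a small series. This is a minimal illustration under assumed data: the values, the dates, and the names `s`, `filled`, `rolling_mean`, and `first_week` are made up for the example.

```python
import pandas as pd

# Ten daily observations (illustrative values) with one missing point.
idx = pd.date_range("2022-01-01", periods=10, freq="D")
values = [1.0, 2.0, float("nan"), 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
s = pd.Series(values, index=idx)

# Fill the gap by linear interpolation between its neighbours.
filled = s.interpolate()

# 3-day moving average; the first two entries are NaN because the
# window is not yet full.
rolling_mean = filled.rolling(window=3).mean()

# Time-based slicing on a DatetimeIndex; both end labels are included.
first_week = filled.loc["2022-01-01":"2022-01-07"]

print(rolling_mean)
print(first_week)
```

Because the series is indexed by date, the slice is written with date strings rather than integer positions.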

Handling datetime data is fundamental to working with dates and times, and Pandas provides robust tools to manage it efficiently. In this section, we will look at how Pandas handles datetime data: the datetime64 data type, parsing and formatting, and common datetime operations.

Pandas stores datetime values using the datetime64 data type, which it builds on from NumPy. This data type offers several advantages:

Precision: datetime64 supports nanosecond-level precision, allowing for accurate representation of timestamps.

Efficiency: It is memory-efficient and performs datetime operations quickly.

Compatibility: Pandas datetime64 is compatible with NumPy’s datetime64, facilitating seamless integration with other scientific computing libraries.

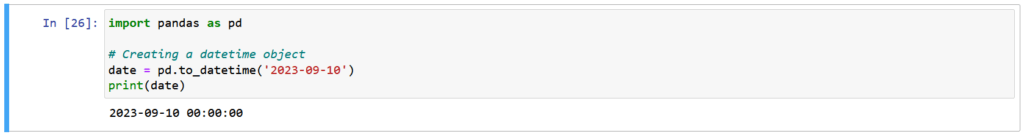

Let’s begin with a basic example of creating datetime objects using Pandas:

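Here, we used the pd.to_datetime() function to convert a string to a datetime value. For a single string the result is a pandas Timestamp; for a list or column of strings it is a DatetimeIndex or Series with a datetime64 dtype.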
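A minimal sketch of that conversion follows. The date strings and variable names are illustrative assumptions, not taken from the original example.

```python
import pandas as pd

# Convert a single date string into a pandas Timestamp.
date_str = "2023-09-10"
timestamp = pd.to_datetime(date_str)

# Convert several strings at once; the result is a DatetimeIndex
# whose dtype is a datetime64 variant.
dates = pd.to_datetime(["2023-09-10", "2023-09-11"])

print(type(timestamp).__name__)  # Timestamp
print(dates.dtype)
```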

Parsing and formatting datetime data are crucial tasks when working with time series data in Pandas. Pandas simplifies these operations, making it easy to convert between datetime strings and datetime objects, as well as format datetime objects as strings.

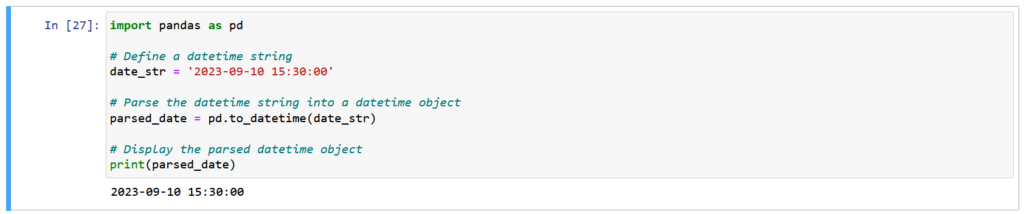

Parsing refers to the conversion of datetime strings into datetime objects, allowing for easy manipulation and analysis. Pandas provides the pd.to_datetime() function to perform this conversion:

In this example, pd.to_datetime() is used to convert the date_str variable into a pandas Timestamp, the scalar counterpart of the datetime64 data type. The resulting parsed_date variable holds the datetime object, which can be further processed and analyzed.
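The parsing step can be sketched as below. The names `date_str` and `parsed_date` follow the text; the sample strings, `parsed_eu`, and `mixed` are assumptions added to show two commonly used options, an explicit `format` and `errors="coerce"`.

```python
import pandas as pd

# Parse an ISO-style string; pandas infers the layout.
date_str = "2023-09-10 15:30:00"
parsed_date = pd.to_datetime(date_str)

# For ambiguous layouts, state the format explicitly (day/month/year here).
parsed_eu = pd.to_datetime("10/09/2023", format="%d/%m/%Y")

# errors="coerce" turns values that cannot be parsed into NaT
# instead of raising an exception.
mixed = pd.to_datetime(pd.Series(["2023-09-10", "not a date"]), errors="coerce")

print(parsed_date)
print(parsed_eu)
print(mixed)
```

Stating the format avoids day/month ambiguity and can also speed up parsing of large columns.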

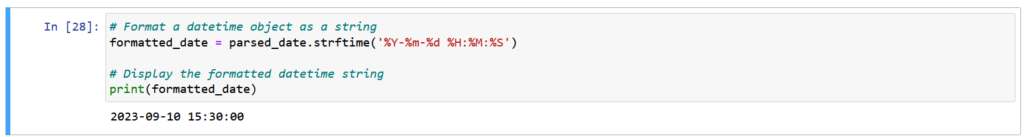

Formatting datetime data is the process of converting datetime objects into human-readable string representations. Pandas offers the strftime() method to achieve this, where you specify the desired format using format codes:

Here, the strftime() method is applied to the parsed_date object, which formats it as a string with the specified format %Y-%m-%d %H:%M:%S, representing the year, month, day, hour, minute, and second.
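A short sketch of the formatting step, assuming an illustrative timestamp. `parsed_date` follows the text, while `formatted`, `dates`, and `labels` are names chosen for the example. For a whole column, the same format codes can be used through the `.dt.strftime()` accessor.

```python
import pandas as pd

parsed_date = pd.to_datetime("2023-09-10 15:30:00")

# Format a single Timestamp as a string.
formatted = parsed_date.strftime("%Y-%m-%d %H:%M:%S")
print(formatted)  # 2023-09-10 15:30:00

# Format every value in a datetime Series via the .dt accessor.
dates = pd.Series(pd.to_datetime(["2023-09-10", "2023-12-25"]))
labels = dates.dt.strftime("%d/%m/%Y")
print(labels.tolist())  # ['10/09/2023', '25/12/2023']
```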

Understanding format codes is essential when using strftime() to customize the output format. Each format code corresponds to a specific datetime component (e.g., year, month, day) and is represented by a character. For example:

%Y: Year with century as a decimal number (e.g., 2023).

%m: Month as a zero-padded decimal number (e.g., 09 for September).

%d: Day of the month as a zero-padded decimal number (e.g., 10).

%H: Hour (24-hour clock) as a zero-padded decimal number (e.g., 15).

%M: Minute as a zero-padded decimal number (e.g., 30).

%S: Second as a zero-padded decimal number (e.g., 00).

By utilizing pd.to_datetime() and strftime(), you can seamlessly convert between datetime strings and datetime objects, allowing for efficient datetime data manipulation and visualization in Pandas. This capability is especially valuable when dealing with time series datasets, where precise timestamp handling is essential.

Pandas is a versatile library for working with date and time data. It provides an array of datetime-related operations to facilitate tasks in time series analysis, data manipulation, and more. In this section, we’ll explore some common datetime operations in Pandas, complete with practical examples.

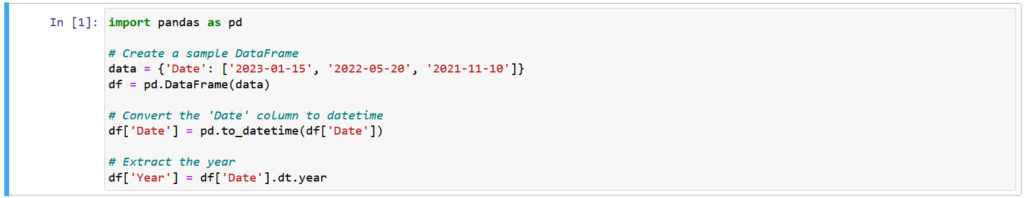

In time series data analysis, it’s often essential to work with specific components of datetime objects like year, month, day, and more. Pandas simplifies this task by providing an array of methods to extract these components. Let’s explore how to extract the year from a datetime column in Pandas, using a practical example:

Here’s a step-by-step explanation of what’s happening:

We start by importing the Pandas library, typically done at the beginning of your Python script or Jupyter Notebook.

We create a sample DataFrame called ‘df’ containing a ‘Date’ column. The ‘Date’ column contains date strings in the ‘YYYY-MM-DD’ format.

To work with datetime data in Pandas, we need to convert the ‘Date’ column to datetime objects. We use the pd.to_datetime() function to perform this conversion, replacing the original column with datetime values.

Once the column is converted, we can leverage the .dt accessor in Pandas to access various datetime components. In our example, we use .dt.year to extract the year component from each datetime value. The result is stored in a new ‘Year’ column.

This creates a DataFrame with an additional ‘Year’ column, displaying the extracted year for each date in the ‘Date’ column. Pandas provides similar attributes such as .dt.month and .dt.day, enabling you to extract other date components just as easily. These operations are particularly useful for time series analysis and reporting, where insights are often derived from specific date details.
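The steps above can be sketched as follows. The ‘Date’ and ‘Year’ column names come from the text; the sample dates and the extra ‘Month’ and ‘Day’ columns are assumptions for illustration.

```python
import pandas as pd

# Sample DataFrame with date strings in YYYY-MM-DD format.
df = pd.DataFrame({"Date": ["2021-03-15", "2022-07-04", "2023-09-10"]})

# Convert the string column to datetime values.
df["Date"] = pd.to_datetime(df["Date"])

# Extract components through the .dt accessor.
df["Year"] = df["Date"].dt.year
df["Month"] = df["Date"].dt.month
df["Day"] = df["Date"].dt.day

print(df)
```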

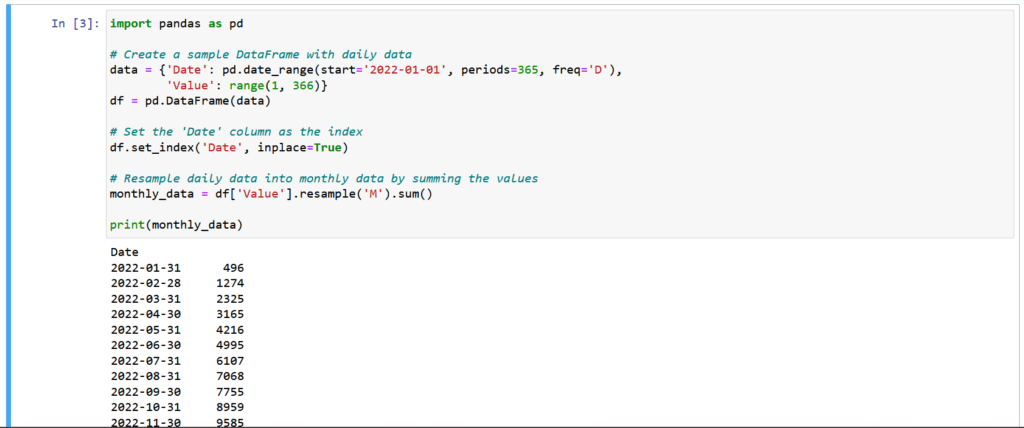

Time series data often come in high-frequency formats, such as daily data, which may need to be aggregated or transformed to a lower frequency for various analytical purposes. Pandas provides a powerful resampling feature that allows you to resample and aggregate time series data with ease. Let’s dive into how you can resample daily data into monthly data using Pandas:

We begin by importing the Pandas library, which is the go-to tool for working with time series data in Python.

We create a sample DataFrame called ‘df,’ which simulates daily data for a year. The ‘Date’ column is generated using the pd.date_range() function, creating a range of dates from January 1, 2022, to December 31, 2022, at a daily frequency.

To perform time series operations effectively, it’s a good practice to set the ‘Date’ column as the DataFrame’s index. This can be achieved using the .set_index() method, as shown in the code.

Now comes the resampling part. In our example, we’re resampling daily data into monthly data by summing the values within each month. The operation is performed on the ‘Value’ column using .resample('M').sum(). The 'M' frequency parameter specifies that we want to resample to a month-end frequency, and the sum() method aggregates the data by summing up values within each month. Note that pandas 2.2 and later prefer the alias 'ME' for month-end and emit a deprecation warning for 'M'.

The result, stored in the ‘monthly_data’ variable, is a new Series with a monthly frequency, summarizing the daily data into monthly values. This resampling capability in Pandas is essential for various applications, from simplifying data for visualization to performing trend analysis at different time scales.
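A minimal sketch of this resampling, assuming made-up values (the integers 1 to 365) for the ‘Value’ column. It passes a `pd.offsets.MonthEnd()` offset object instead of the 'M' string alias, since newer pandas versions deprecate that alias in favor of 'ME'; the month-end bins should be the same either way.

```python
import pandas as pd

# Daily data for 2022 with illustrative values 1..365.
df = pd.DataFrame({
    "Date": pd.date_range(start="2022-01-01", end="2022-12-31", freq="D"),
    "Value": range(1, 366),
})

# Use the date column as the index so time-based operations work.
df = df.set_index("Date")

# Downsample to month-end frequency, summing the daily values per month.
monthly_data = df["Value"].resample(pd.offsets.MonthEnd()).sum()

print(monthly_data.head())
```

Other aggregations such as `.mean()` or `.max()` can be swapped in for `.sum()` in the same way.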

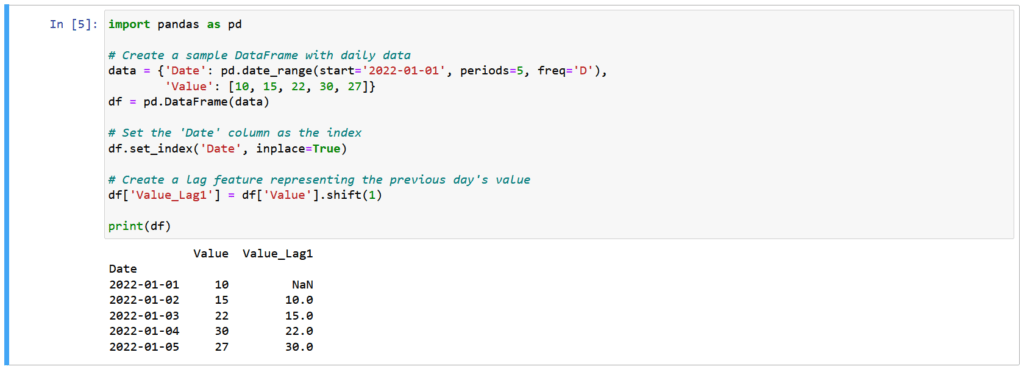

In time series analysis, it’s often necessary to shift or lag data points backward or forward in time. This can be useful for creating features that represent past or future values of a time series. Pandas provides a straightforward way to achieve this. Let’s explore how to create a lag feature by shifting the data:

We import Pandas and create a sample DataFrame called ‘df’ with daily data. The DataFrame contains two columns: ‘Date’ and ‘Value.’

To work effectively with time series data, we set the ‘Date’ column as the index using the .set_index() method.

The crucial part is creating a lag feature. In this example, we generate a new column, ‘Value_Lag1,’ holding the previous day’s (‘lagged’) value of the ‘Value’ column. This is achieved using .shift(1), where the argument ‘1’ moves each value one row forward along the index, so every row receives the value from the row before it and the first row becomes NaN.

The resulting DataFrame now includes the ‘Value_Lag1’ column, which contains the lagged values of the ‘Value’ column. You can modify the shift value to create lag features for different time intervals. Shifting is a fundamental operation in time series analysis, as it allows you to compare current data points with past observations, making it easier to identify trends and patterns in your data.
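The lag feature described above might look like this. The column names follow the text; the five sample values and the ‘Change’ column are assumptions added for illustration.

```python
import pandas as pd

# Five days of illustrative data.
df = pd.DataFrame({
    "Date": pd.date_range(start="2022-01-01", periods=5, freq="D"),
    "Value": [10, 20, 30, 40, 50],
})
df = df.set_index("Date")

# Each row receives the previous row's value; the first row has no
# predecessor, so it becomes NaN.
df["Value_Lag1"] = df["Value"].shift(1)

# A lag column makes day-over-day changes easy to compute.
df["Change"] = df["Value"] - df["Value_Lag1"]

print(df)
```

A negative argument such as `.shift(-1)` pulls in the next row's value instead, which gives a lead rather than a lag.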

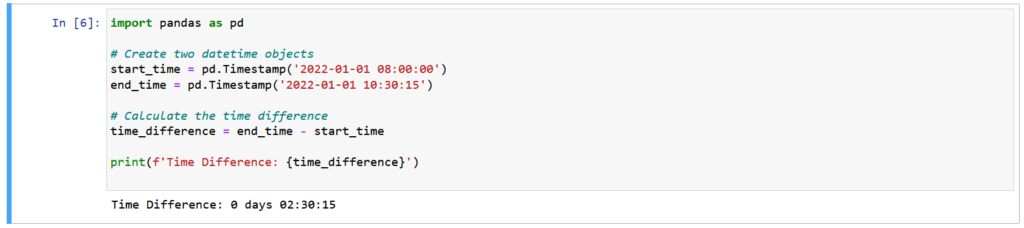

In time series analysis, calculating time differences between two datetime objects is a common task. This allows us to measure time intervals, which can be valuable in various applications. Pandas makes it easy to perform such calculations. Let’s see how to calculate the time difference between two datetime objects:

We start by importing Pandas and creating two datetime objects: ‘start_time’ and ‘end_time.’ These objects represent specific timestamps.

To calculate the time difference, we simply subtract ‘start_time’ from ‘end_time.’ This operation returns a Timedelta object (not a datetime) representing the duration between the two timestamps. The duration is expressed in days, hours, minutes, and seconds, with sub-second components down to nanoseconds.

Finally, we print the time difference to the console.

Pandas provides a straightforward way to calculate time differences, making it practical for applications like measuring the time between two events, tracking the duration of processes, or analyzing time intervals within time series data. Time differences can offer insights into patterns and trends, especially when dealing with sequential data.
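A small sketch of this calculation. The names `start_time` and `end_time` follow the text; the timestamps themselves and `time_difference` are illustrative assumptions.

```python
import pandas as pd

start_time = pd.to_datetime("2023-09-10 08:00:00")
end_time = pd.to_datetime("2023-09-12 15:30:00")

# Subtracting two Timestamps yields a Timedelta.
time_difference = end_time - start_time

print(time_difference)                  # 2 days 07:30:00
print(time_difference.days)             # 2
print(time_difference.total_seconds())  # 199800.0
```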

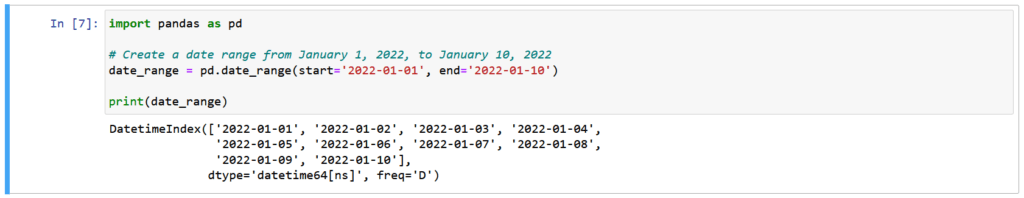

In time series analysis, creating date ranges is a common task, especially when setting up time-based indices for your data. Pandas offers a convenient way to generate date ranges. Here’s how you can do it:

We begin by importing Pandas.

The pd.date_range() function is used to create a date range. In this example, we specify the start date as ‘2022-01-01’ and the end date as ‘2022-01-10’. This function generates a range of dates, including both the start and end dates.

The resulting date_range is a Pandas DatetimeIndex object that contains all the dates within the specified range.

We print the date_range to the console.

Creating date ranges is invaluable when you want to set up a time-based index for your time series data. It simplifies the process of working with temporal data, allowing you to easily access and manipulate your data points according to specific dates or time intervals. Whether you are dealing with daily, monthly, or yearly data, Pandas makes it straightforward to create the date ranges you need for your analysis.
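The date range from the example can be sketched as below. The start and end dates come from the text; `month_starts` is an added assumption showing the `periods` and `freq` parameters.

```python
import pandas as pd

# Daily range; both the start and end dates are included.
date_range = pd.date_range(start="2022-01-01", end="2022-01-10")
print(date_range)
print(len(date_range))  # 10

# Alternatively, give a start, a number of periods, and a frequency.
# "MS" means month start.
month_starts = pd.date_range(start="2022-01-01", periods=3, freq="MS")
print(month_starts)
```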

In this section, we’ve explored the world of time series analysis with Pandas. We discussed the significance of time series data and how Pandas facilitates its handling and analysis. We’ve learned about datetime data management, including parsing, formatting, and common operations.

We also covered resampling, shifting, calculating time differences, and creating date ranges. These skills are essential for gaining insights and making data-driven decisions.

As we continue, we’ll delve deeper into time series analysis with Pandas, uncovering advanced techniques for deeper insights. Stay tuned for more!
